Tags: #AI

References:

Graph Convolutional Networks —Deep Learning on Graphs

Smarter.ai - Graph Convolutional Networks: Implementation in PyTorch

Notes for Stanford CS224W

Basic Concepts

For Undirected graph, we define the node degree

For Directed graphs have directed links (e.g. following on Twitter). We define the in-degree

Introduction

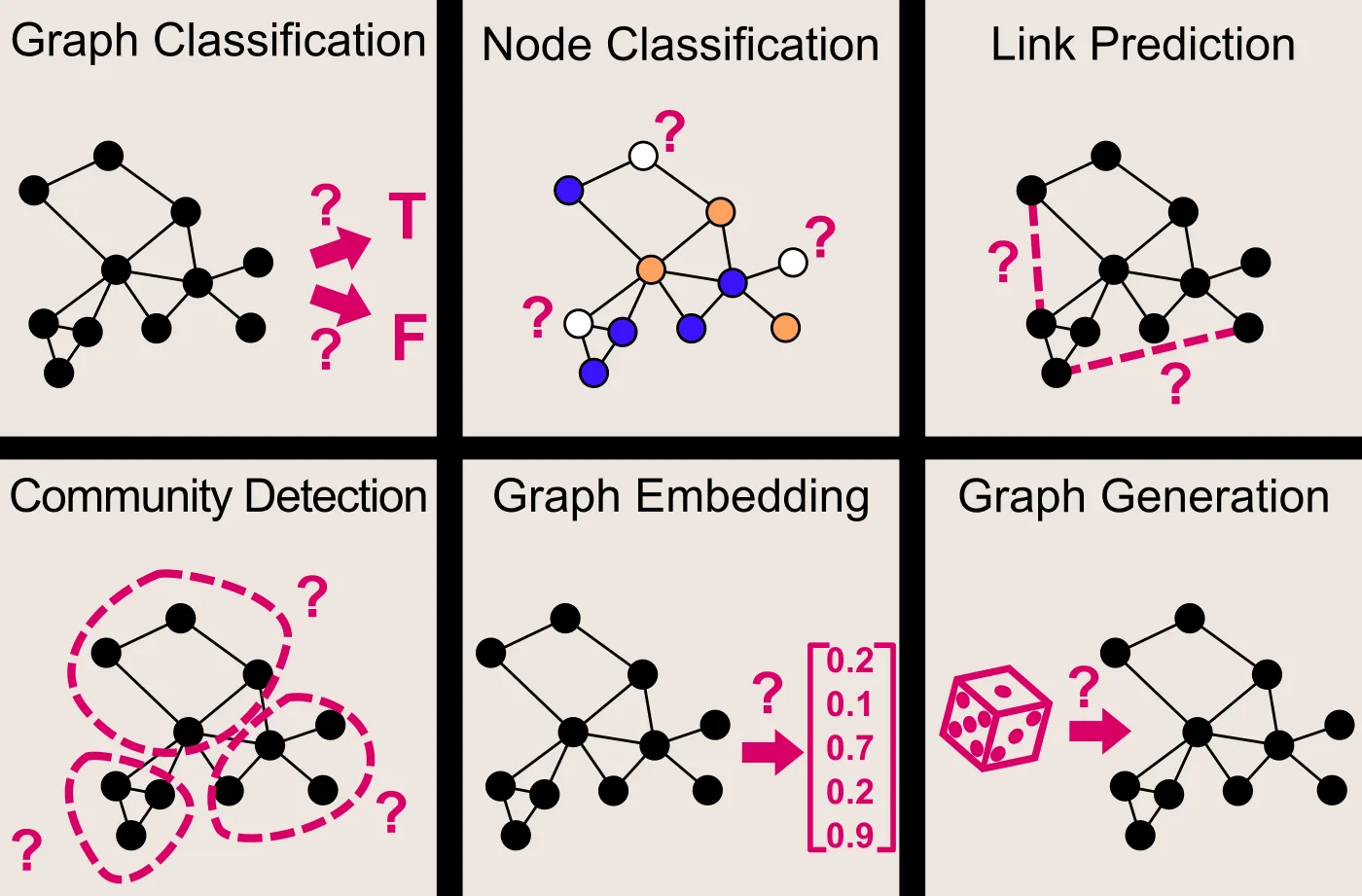

We've worked on graph classification, node classification, link prediction, community detection, graph embedding, and graph generation. These involve predicting a graph's class, classifying nodes, predicting connections, clustering nodes, preserving information in a vector, and generating new graphs.

Traditional convolutional methods in graphs is meaningless.

⇒ Graph convolution

Definition & Methods

In the case of a GCN, the inputs are:

array, for each of the nodes of the graph, features. adjacency matrix.

GCN Operation

From Zotero: Semi-Supervised Classification with Graph Convolutional Networks

: A vector representing the features of a node in the graph. : The graph structure, represented as an adjacency matrix. The entry of the matrix is if there is an edge from node to node in the graph, and otherwise. : The matrix of eigenvectors of the graph Laplacian matrix , which is defined as , where is the diagonal matrix of node degrees. : This is a diagonal matrix of the eigenvalues of the Laplacian matrix . : The output of the GCN operation, which is a transformed version of the input features .

GCN Layer

is the representation of node at iteration . This is the updated node representation that we are computing. is the set of neighbors of node , including itself. This is the neighborhood of node that we consider in the GCN layer. and are the degrees of nodes and , respectively. The degree of a node is the number of edges incident on it (edged directly connected to a node). The term is a normalization factor that ensures that the updates are scaled appropriately based on the degrees of the nodes. This is known as the symmetric normalization. is a weight matrix that is learned during training. This matrix is used to transform the feature representations of the nodes. is the representation of node at iteration . This is the previous iteration's node representation. is the transformed representation of node at iteration . This is the result of applying the weight matrix to the node representation. is the contribution of node to the updated representation of node . This term represents the normalized message sent from node to node . is the sum of the normalized messages sent from all neighbors of node , including itself. This sum represents the aggregated information from the neighborhood of node . is a bias term that is learned during training. This term is added to the aggregated information to produce the final updated representation of node .

ChebConv

From Zotero: Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering

However, without further assumptions, such a filter does not have a compact support, and moreover learning all the components of

This reduce the learning complexity to

Related Works

PyG

pyg-team/pytorch_geometric: Graph Neural Network Library for PyTorch